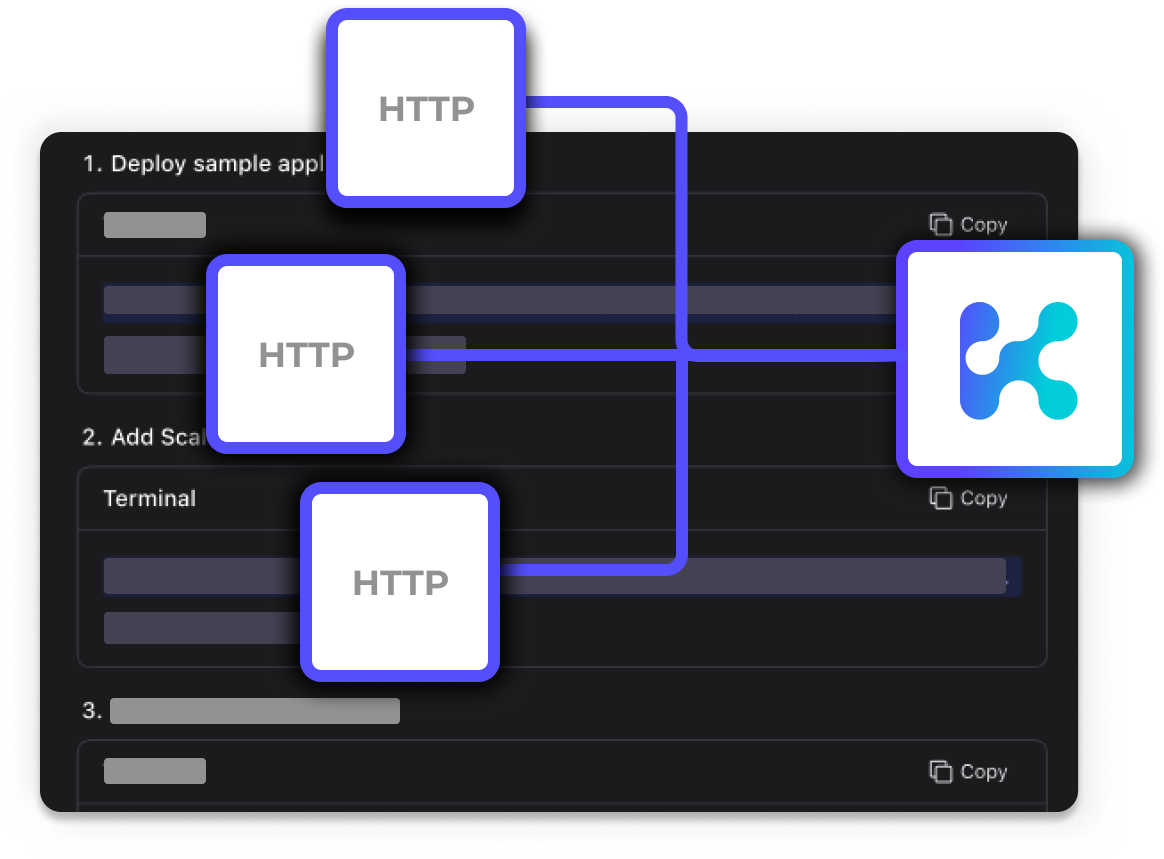

Use HTTP metrics to trigger autoscaling with Kedify and KEDA

The HTTP-based autoscaler dynamically scales Kubernetes workloads based on real-time HTTP traffic metrics and can scale them down to zero.


Overview of Kedify's HTTP Scaler

The HTTP Scaler is a production-ready solution designed to deliver unmatched performance, stability, and ease of use for scaling based on HTTP traffic in Kubernetes environments. Built on top of the KEDA HTTP Add-On, the Kedify HTTP Scaler incorporates significant improvements that enhance stability and performance.

Key Features

1. Automatic Network Traffic Wiring: Simplifies configuration by automating necessary Ingress reconfigurations.
2. Fallback Mechanism: Ensures continuous availability by rerouting traffic during control plane issues.
3. Built-In Health Checks: Maintains proper health statuses for applications scaled to zero.
4. gRPC and Websockets Support: Enables autoscaling based on gRPC and Websockets, including scale to zero.
5. Performance and Envoy Integration: Leverages the Envoy proxy for superior performance and reliability.

Compare the Kedify HTTP Scaler and the KEDA Community HTTP Add-On

Kedify HTTP Scaler Types

kedify-http: Offers full autowiring and fallback support (documentation).

kedify-envoy-http: Utilizes an existing Envoy proxy and scales from 1 replica with limited autowiring and fallback capabilities (documentation).

Learn More

How To: Step-by-step guides for configuring HTTP scaling in various scenarios: How To Guides

Tutorial: An in-browser, step-by-step guide to using the HTTP Scaler: HTTP Scaler Tutorial

Quickstart: A step-by-step guide using a local Kubernetes cluster: HTTP Scaler Quickstart

Featured Use Cases

Scenario:

Scale out, on demand, a specific service that is only needed during sales events and peak traffic periods. This service does not need to run all the time, so scaling it down to zero when it is idle saves resources.

HTTP Scaler Usage:

KEDA Usage:

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: ecommerce
spec:
  # Workload to scale
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ecommerce-service
  # Allow scale down to zero when there is no traffic
  minReplicaCount: 0
  maxReplicaCount: 10
  triggers:
    - type: kedify-http
      metadata:
        hosts: ecommerce-service.company.com
        service: ecommerce-service
        port: "8080"
        # Scale on request rate, with a target value of 100
        scalingMetric: requestRate
        targetValue: "100"
```
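To get a feel for how `targetValue`, `minReplicaCount`, and `maxReplicaCount` interact, the following Python sketch estimates the replica count for a given request rate. It assumes the target is treated as a per-replica average, as in the standard Kubernetes Horizontal Pod Autoscaler calculation (desired replicas = ceil(total metric / target)). The function name `estimate_replicas` and its parameters are hypothetical and only illustrate the arithmetic. The actual behavior of the Kedify HTTP Scaler also depends on settings not shown here, such as the metric window and HPA stabilization.

```python
import math


def estimate_replicas(
    request_rate: float,
    target_value: float = 100.0,
    min_replicas: int = 0,
    max_replicas: int = 10,
) -> int:
    """Rough, HPA-style estimate of the desired replica count.

    Assumption: target_value is a per-replica average, so the estimate is
    ceil(request_rate / target_value), clamped to [min_replicas, max_replicas].
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    if request_rate <= 0:
        # No traffic: fall back to the configured minimum (0 allows scale to zero).
        return min_replicas
    wanted = math.ceil(request_rate / target_value)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Defaults mirror the ScaledObject above: target 100, min 0, max 10.
    for rate in (0, 1, 250, 5000):
        print(f"request rate {rate}: about {estimate_replicas(rate)} replica(s)")
```

Under these assumptions, no traffic yields zero replicas, any non-zero traffic yields at least one, and very high traffic is capped at `maxReplicaCount`.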