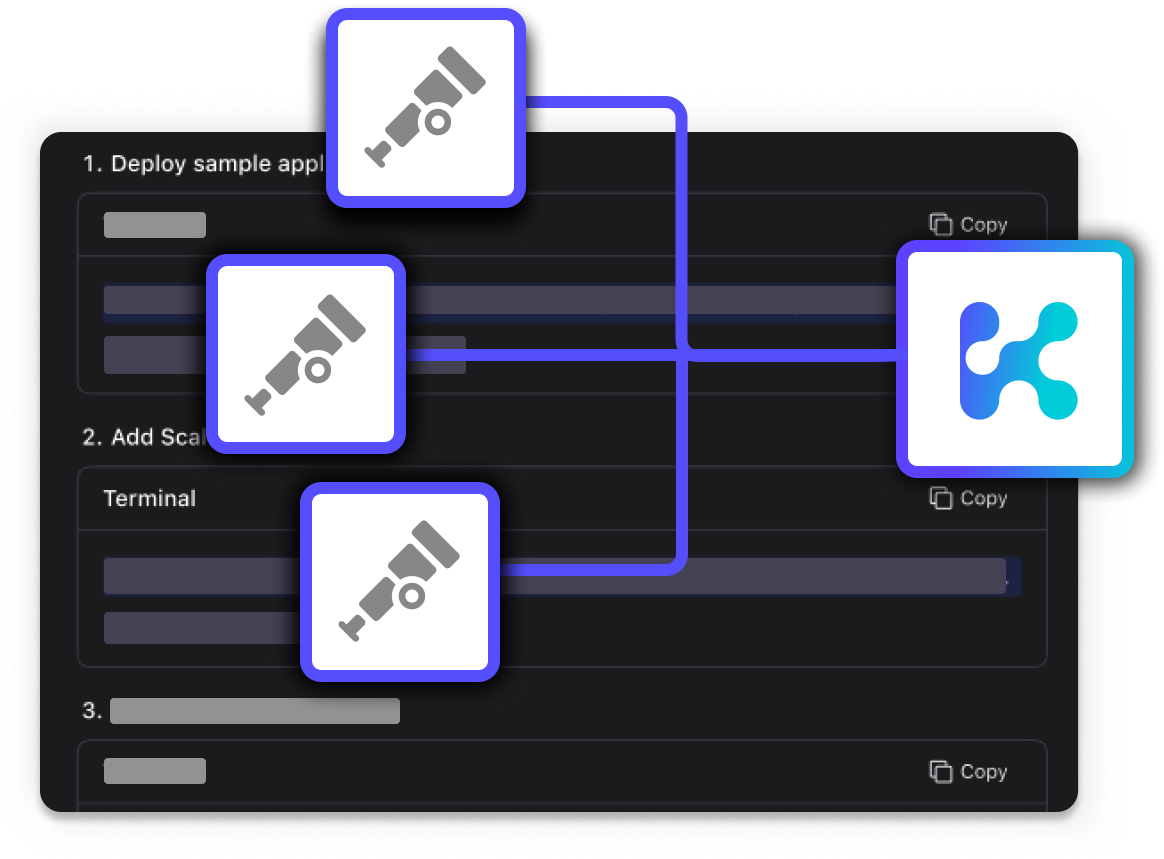

Use OpenTelemetry metrics to trigger autoscaling with Kedify and KEDA

Autoscaler for Kubernetes workloads based on real-time metrics from Prometheus, OpenTelemetry, and other compliant sources.


Overview of OpenTelemetry Scaler in Kedify

The OpenTelemetry (OTEL) Scaler enables precise, data-driven scaling for Kubernetes workloads. OpenTelemetry can capture a wide range of metrics, which lets the Kedify scaler adjust resources dynamically, improving response times while keeping costs down. The scaler uses a push-based approach: applications (or an OpenTelemetry Collector) push metrics to the scaler. In pull-based systems like Prometheus, a server instead scrapes metrics on a fixed interval.
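To show what the push-based approach looks like in practice, here is a minimal OpenTelemetry Collector configuration. It receives OTLP metrics from applications and forwards them to the scaler over OTLP. This is a sketch, not Kedify's reference setup. The scaler address `keda-otel-scaler.keda.svc:4317` is an assumption for illustration, so check the Kedify OTEL scaler documentation for the address your installation uses.

```yaml
# Minimal OpenTelemetry Collector config (sketch): applications push OTLP
# metrics to the Collector, which pushes them on to the scaler.
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317   # applications send OTLP/gRPC here
      http:
        endpoint: 0.0.0.0:4318   # or OTLP/HTTP here

exporters:
  otlp/kedify:
    # ASSUMPTION: hypothetical in-cluster address of the scaler's OTLP receiver
    endpoint: keda-otel-scaler.keda.svc:4317
    tls:
      insecure: true             # plain-text gRPC inside the cluster

service:
  pipelines:
    metrics:
      receivers: [otlp]
      exporters: [otlp/kedify]
```

This sketch leaves out a `batch` processor to avoid adding buffering delay before metrics reach the scaler. Add one if your export volume warrants it.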

Key Features

1. Real-Time Metric Collection: Gathers metrics in real time, enabling timely scaling based on traffic demand.

2. Wide Metric Range: Supports a variety of metrics, such as request rates and concurrency, for granular scaling configurations.

3. No Need for a Prometheus Server: Eliminates the need to deploy a Prometheus server, reducing infrastructure overhead and resource usage.

4. Faster Response Times: With a push-based model, metrics reach the scaler as soon as they are exported instead of waiting for the next scrape interval, so KEDA can react to load changes sooner.

5. Flexible Integration Options: OpenTelemetry's support for multiple protocols and integrations enables streamlined observability setups across diverse environments with minimal configuration.
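On the application side, you usually choose the push path and protocol through the standard OpenTelemetry SDK environment variables. The fragment below is a sketch that assumes an SDK honoring those variables; support varies by language. The image and the Collector Service name `otel-collector.observability.svc` are hypothetical. `OTEL_METRIC_EXPORT_INTERVAL` is in milliseconds and the specification's default is 60000, so lowering it shortens how long a metric waits before it is pushed.

```yaml
# Fragment of a Deployment pod template (sketch). The image and the
# Collector Service name are hypothetical placeholders.
spec:
  template:
    spec:
      containers:
        - name: ai-training-service
          image: example.com/ai-training-service:latest   # hypothetical image
          env:
            - name: OTEL_SERVICE_NAME
              value: ai-training-service
            # Push metrics over OTLP/gRPC to the Collector (hypothetical address)
            - name: OTEL_EXPORTER_OTLP_ENDPOINT
              value: http://otel-collector.observability.svc:4317
            - name: OTEL_EXPORTER_OTLP_PROTOCOL
              value: grpc
            # Export every 5 s instead of the specification default of 60 s
            - name: OTEL_METRIC_EXPORT_INTERVAL
              value: "5000"
```

Kubernetes requires `env` values to be strings, which is why the interval is quoted.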

Learn More

- Documentation: Kedify OTEL scaler documentation.

- Migrate from Prometheus Scaler: Follow our guide on migrating from the Prometheus scaler to the OpenTelemetry scaler.

- How To: Check out our guide on how to use the OpenTelemetry scaler.

- GitHub Repository: Explore the source code and examples in the Kedify OTEL add-on repository.

- For more details about the scaler, see our blog post.
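To give a feel for what a migration from Prometheus involves, here is a hedged before/after of a single trigger. The Prometheus server address and the PromQL query are hypothetical. The `prometheus` trigger fields (`serverAddress`, `query`, `threshold`) are KEDA's standard ones. `operationOverTime: 'rate'` only approximates PromQL's `rate()`, so see the migration guide for exact semantics.

```yaml
# Before (sketch): KEDA's built-in Prometheus scaler pulls from a Prometheus server.
triggers:
  - type: prometheus
    metadata:
      serverAddress: http://prometheus-server.monitoring.svc:9090   # hypothetical
      query: 'avg(rate(model_training_tokens{model="my_model", job="training"}[1m]))'
      threshold: '500'
---
# After (sketch): the Kedify OTEL scaler receives pushed metrics; no Prometheus server.
triggers:
  - type: kedify-otel
    metadata:
      metricQuery: 'avg(model_training_tokens{model=my_model, job=training})'
      operationOverTime: 'rate'
      targetValue: '500'
```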

Featured Use Cases

Scenario:

Scale AI/ML training workloads dynamically based on metrics such as tokens per minute and GPU memory usage. Because no Prometheus server sits between the workload and the scaler, scaling stays close to real time as computational load changes.

OpenTelemetry Scaler Usage:


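```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: ai-training
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ai-training-service
  minReplicaCount: 1
  maxReplicaCount: 15
  # With several triggers, the HPA created by KEDA uses whichever
  # trigger asks for the most replicas.
  triggers:
    # Training throughput: rate of model_training_tokens, averaged across series
    - type: kedify-otel
      metadata:
        metricQuery: 'avg(model_training_tokens{model=my_model, job=training})'
        operationOverTime: 'rate'
        targetValue: '500'
    # GPU memory pressure: no operationOverTime, so the scaler's default applies
    - type: kedify-otel
      metadata:
        metricQuery: 'avg(gpu_memory_usage{model=my_model, job=training})'
        targetValue: '800'
```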
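You can work through by hand how the two triggers combine. This assumes KEDA's default `AverageValue` metric type and ignores the HPA's tolerance and stabilization windows. Under those assumptions, each trigger $i$ with metric value $m_i$ and target $t_i$ asks for $\lceil m_i / t_i \rceil$ replicas. The HPA takes the largest request and clamps it to `minReplicaCount` and `maxReplicaCount`. The readings below (3200 and 2000) are hypothetical.

$$
\begin{aligned}
\text{desired}_i &= \left\lceil \frac{m_i}{t_i} \right\rceil \\
\text{tokens: } \left\lceil \tfrac{3200}{500} \right\rceil &= \lceil 6.4 \rceil = 7 \\
\text{GPU memory: } \left\lceil \tfrac{2000}{800} \right\rceil &= \lceil 2.5 \rceil = 3 \\
\text{replicas} &= \min\bigl(15,\ \max(1,\ \max(7, 3))\bigr) = 7
\end{aligned}
$$

In this example, the token-rate trigger drives the replica count, and GPU memory would only take over if it climbed above $7 \times 800 = 5600$.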