Using metrics from OTEL collector for scaling

by Jirka Kremser, Kedify

October 31, 2024

Intro

KEDA provides numerous ways to integrate with external systems. All of the available scalers have one thing in common: metrics.

One of the most general scalers the KEDA ecosystem provides is the Prometheus scaler, where one can use a PromQL query to define the metric that will be used for making scaling decisions.

In this blog post we will describe a similar, brand-new scaler that integrates smoothly with the OpenTelemetry collector, so that KEDA can react more quickly and you don’t actually need to have a Prometheus server running.

Prometheus Scaler

In a traditional Prometheus & Grafana setup, you configure the Prometheus server to scrape various targets, either dynamically using the Prometheus operator and its CRDs or statically in the Prometheus config. Part of this configuration is the scrape interval, which denotes how often Prometheus scrapes the metrics endpoints. It defaults to one minute, and for DevOps teams it has always been a tradeoff between good enough metric resolution and the resource overhead of monitoring (CPU & memory).

When using this scaler, KEDA adds its own delay to the picture, because it polls the scaler for metrics at its own polling interval.

So in the worst-case scenario, the time required for KEDA to start scaling is ${prom_scrape_interval} + ${keda_polling_interval}, not counting the delay that some exporters introduce themselves or the time Prometheus needs to process the metric query and return the result.
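To make the worst case concrete, here is a small sketch of the arithmetic. It assumes the Prometheus default scrape interval of one minute mentioned above and KEDA's default polling interval of 30 seconds; the function name `worst_case_delay` is made up for illustration, and exporter and query latency are ignored.

```shell
# Worst-case delay (in seconds) before KEDA sees a change in a metric,
# ignoring exporter and query latency.
# $1 = Prometheus scrape interval, $2 = KEDA polling interval
worst_case_delay() {
  echo $(( $1 + $2 ))
}

worst_case_delay 60 30   # prints 90
```

With the defaults, a spike in traffic can therefore go unnoticed by KEDA for up to a minute and a half.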

Common KEDA & Prometheus interaction:

In general, signals propagate more slowly in the pull-based model than in the push-based model of communication. The Prometheus ecosystem comes with the Pushgateway, and one can also ingest metrics into Prometheus using the remote write protocol.

However, the first approach is better suited to short-running tasks that may miss the regular scrape, and at the end of the day it all ends up in the pull model again. As for remote write, writing a client for it is quite complex, and the protocol was designed rather for TSDBs to exchange data.

Luckily, we can leverage the OpenTelemetry (OTEL) standard and its OTEL collector.

OpenTelemetry

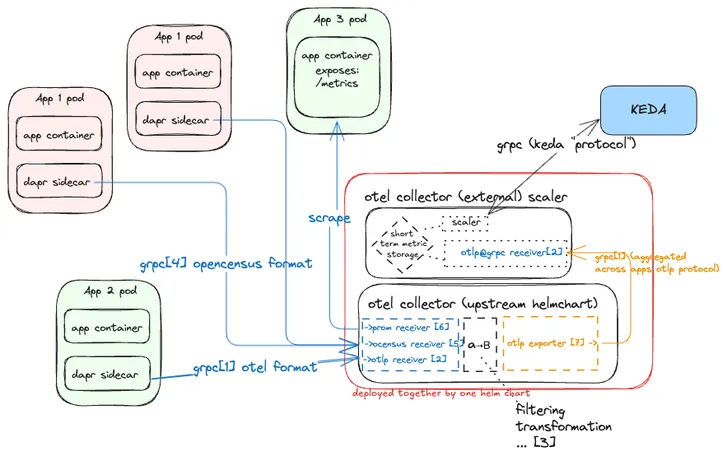

OTEL is an umbrella project that aims to cover all things related to observability. For our purposes, we will set aside logs and traces and focus solely on metrics. The OTEL collector is a tool that promises a “vendor-agnostic implementation of how to receive, process and export telemetry data”. Collectors can also form pipelines (or even DAGs), where one collector feeds one or more others.

I’ve seen architectures where it is deployed as a sidecar container in each workload’s pod, as well as dedicated deployments running as a DaemonSet, or cross-cluster pipelines. On the receiving side of the collector, there are multiple implementations of so-called receivers. The signals (metrics, logs, traces) are then processed by processors; again, a vast number of options is available. Finally, the data can be sent to one or more destinations using exporters.

All in all, it allows for various forms of integration with the majority of monitoring tools, and it has become a de facto standard for both the shape of the telemetry data and the protocols being used.

Following the hub-and-spoke paradigm, if we allow KEDA to integrate with the OTEL collector, we can use all those community receivers, processors and exporters as building blocks for a monitoring “cathedral”.

Let us introduce the KEDA OTEL Scaler Add-On!

The OTEL Scaler

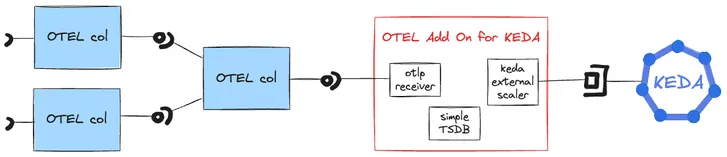

The Kedify OTEL Scaler is an add-on to KEDA that integrates seamlessly with OpenTelemetry (OTEL) through the OTLP receiver and connects with KEDA via gRPC, following the external scaler contract.

On the receiving end, the OTEL Scaler temporarily stores incoming metric data in short-term storage so that it can capture trends. The default retention window is one minute, and this duration is fully configurable.

This setup enables the creation of ScaledObject or ScaledJob resources with the kedify-otel trigger type (for more details, please see the scaler documentation).

The trigger metadata supports a metric query similar to PromQL, which the scaler evaluates against a simple in-memory TSDB to enable precise, responsive scaling decisions.
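As a rough illustration of what evaluating a rate over a short retention window means, the sketch below computes a per-second rate of increase from timestamped counter samples, keeping only the samples inside the window. This is not the add-on's actual implementation: the function name `rate_over_window`, the input format (one `timestamp value` pair per line, timestamps in seconds), and the simple first-to-last calculation are assumptions made for this example, and counter resets are not handled.

```shell
# rate_over_window NOW WINDOW
# Reads "timestamp value" lines on stdin, keeps samples with
# NOW - WINDOW <= timestamp <= NOW, and prints (last - first) / elapsed.
# Prints 0 when fewer than two samples fall inside the window.
rate_over_window() {
  awk -v now="$1" -v window="$2" '
    $1 >= now - window && $1 <= now {
      if (n == 0) { t0 = $1; v0 = $2 }
      t1 = $1; v1 = $2; n++
    }
    END {
      if (n < 2 || t1 == t0) { print 0; exit }
      print (v1 - v0) / (t1 - t0)
    }'
}

# The sample at t=30 is older than the 60-second window and is dropped.
printf '%s\n' "30 100" "100 1000" "130 1030" "160 1090" | rate_over_window 160 60   # prints 1.5
```

A longer window smooths out short spikes, while a shorter one reacts faster, which is why the retention period being configurable matters.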

Example

- Create a K8s Cluster

For this demo, we will be using k3d that can create lightweight k8s clusters, but any k8s cluster will work. For installation of k3d, please consult k3d.io.

```shell
# create cluster and expose internal (NodePort) 31198 as port 8080 on the host
k3d cluster create metric-push -p "8080:31198@server:0"
```

- Prepare Helm Chart Repos

We will be installing three Helm releases so we need to add the repositories first.

```shell
helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts
helm repo add kedify https://kedify.github.io/charts
helm repo update open-telemetry kedify
```

- Install Demo Application

The demo application is the microservice-based e-commerce application from the OpenTelemetry demo.

Architecture: https://opentelemetry.io/docs/demo/architecture/

```shell
helm upgrade -i my-otel-demo open-telemetry/opentelemetry-demo -f https://raw.githubusercontent.com/kedify/otel-add-on/refs/heads/main/examples/metric-push/opentelemetry-demo-values.yaml
# and check if the app is running
open http://localhost:8080
```

- Install the Addon

```shell
helm upgrade -i kedify-otel oci://ghcr.io/kedify/charts/otel-add-on --version=v0.0.4 -f https://raw.githubusercontent.com/kedify/otel-add-on/refs/heads/main/examples/metric-push/scaler-only-push-values.yaml
```

In this step we are installing the otel-add-on using its Helm chart. The Helm chart can also install yet another OTEL collector that can be used for final metric processing before the metrics reach the otel-add-on. On the diagram, that would be the blue box next to the otel-add-on (red) box. The demo application, however, deploys its own collector, so we can hook into that one.

This connection was configured in the Helm chart values used in the previous step:

```yaml
opentelemetry-collector:
  config:
    exporters:
      otlp/keda:
        endpoint: keda-otel-scaler:4317
        tls:
          insecure: true
    service:
      pipelines:
        metrics:
          exporters: [otlp/keda, debug]
```

- Install KEDA by Kedify

```shell
helm upgrade -i keda kedify/keda --namespace keda --create-namespace --version v2.16.0-1
```

- Wait for All the Deployments

```shell
kubectl rollout status -n keda --timeout=300s deploy/keda-operator
kubectl rollout status -n keda --timeout=300s deploy/keda-operator-metrics-apiserver
for d in \
  my-otel-demo-accountingservice \
  my-otel-demo-checkoutservice \
  my-otel-demo-frauddetectionservice \
  my-otel-demo-frontend \
  my-otel-demo-kafka \
  my-otel-demo-loadgenerator \
  my-otel-demo-valkey \
  my-otel-demo-productcatalogservice \
  my-otel-demo-otelcol \
  my-otel-demo-shippingservice \
  my-otel-demo-frontendproxy \
  my-otel-demo-currencyservice \
  my-otel-demo-adservice \
  my-otel-demo-jaeger \
  my-otel-demo-emailservice \
  my-otel-demo-prometheus-server \
  my-otel-demo-paymentservice \
  my-otel-demo-recommendationservice \
  my-otel-demo-imageprovider \
  my-otel-demo-grafana \
  my-otel-demo-cartservice \
  my-otel-demo-quoteservice \
  otel-add-on-scaler ; do
  kubectl rollout status --timeout=600s deploy/${d}
done
```

We will be scaling two microservices of this application. First, let’s check what metrics are available in the shipped Grafana.

We will use the following two metrics for scaling the recommendationservice and productcatalogservice microservices.

```text
app_frontend_requests_total{instance="0b38958c-f169-4a83-9adb-cf2c2830d61e", job="opentelemetry-demo/frontend", method="GET", status="200", target="/api/recommendations"} 1824
app_frontend_requests_total{instance="0b38958c-f169-4a83-9adb-cf2c2830d61e", job="opentelemetry-demo/frontend", method="GET", status="200", target="/api/products"} 1027
```

- Create ScaledObjects

Example for one of them:

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: recommendationservice
spec:
  scaleTargetRef:
    name: my-otel-demo-recommendationservice
  triggers:
    - type: kedify-otel
      metadata:
        scalerAddress: 'keda-otel-scaler.default.svc:4318'
        metricQuery: 'avg(app_frontend_requests{target=/api/recommendations, method=GET, status=200})'
        operationOverTime: 'rate'
        targetValue: '1'
        clampMax: '20'
  minReplicaCount: 1
```

You can apply both of them by running:

```shell
kubectl apply -f https://raw.githubusercontent.com/kedify/otel-add-on/refs/heads/main/examples/metric-push/sos.yaml
```

The demo application contains a load generator that, by default, creates some traffic in the e-shop and can be further tweaked at the http://localhost:8080/loadgen/ endpoint. However, it’s too slow, so let’s create more noise using a tool called hey and observe the effects of autoscaling:

```shell
# in one terminal:
watch kubectl get deploy my-otel-demo-recommendationservice my-otel-demo-productcatalogservice
```

Then, in one or more other terminals:

```shell
hey http://localhost:8080/api/products
hey http://localhost:8080/api/recommendations
```

- Explore Metrics Otel Add On Exposes

We can also check the internal metrics of the otel-add-on. This can be useful for debugging when logs are not enough.

```shell
kubectl port-forward svc/keda-otel-scaler 8282:8080
# in another terminal, while the port-forward keeps running:
curl -s localhost:8282/metrics
```

Among other things, it exposes the last value sent to KEDA, the number of writes to and reads from the TSDB, common Go runtime metrics, etc.
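The endpoint returns metrics in the Prometheus text format, which can be long. A small filter like the one below can help narrow the output down; this is only a sketch, the helper name `metric_lines` is made up, and the sample input stands in for the real `curl` output with invented values. `go_goroutines` is one of the standard metrics exported by Go applications that use the Prometheus client library.

```shell
# metric_lines PREFIX
# Reads Prometheus text-format output on stdin and prints only the sample
# lines (no "# HELP" / "# TYPE" comments) whose metric name starts with PREFIX.
metric_lines() {
  grep -v '^#' | grep "^$1"
}

# Stand-in for `curl -s localhost:8282/metrics`; the values are made up.
printf '%s\n' \
  '# HELP go_goroutines Number of goroutines that currently exist.' \
  '# TYPE go_goroutines gauge' \
  'go_goroutines 42' \
  'process_open_fds 12' | metric_lines go_   # prints: go_goroutines 42
```

Against the running add-on, the same filter would be used as `curl -s localhost:8282/metrics | metric_lines go_`.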

- Clean Up

```shell
# delete the k3d cluster
k3d cluster delete metric-push
```

Conclusion

In the demo we used the metrics coming from two different microservices and scaled them horizontally based on the rate at which the counters were increasing. We used the number of incoming HTTP requests, but any metric that the application exposes would work here.
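To illustrate how such a rate could translate into a replica count, the sketch below applies the usual Horizontal Pod Autoscaler rule for average-value metrics, `ceil(metric / target)`, to the values from the ScaledObject shown earlier (`targetValue: '1'`, `clampMax: '20'`, `minReplicaCount: 1`). It assumes that `clampMax` caps the metric value before it is handed to KEDA; the helper name `desired_replicas` is made up for this example, and the real HPA additionally applies a tolerance and stabilization windows that are ignored here.

```shell
# desired_replicas METRIC TARGET CLAMP_MAX
# Approximates the replica count for an average-value metric:
# clamp the metric, divide by the target, round up, and keep at least 1.
desired_replicas() {
  awk -v m="$1" -v t="$2" -v max="$3" 'BEGIN {
    m += 0; t += 0; max += 0      # force numeric values
    if (m > max) m = max          # assumed clampMax behaviour
    r = m / t
    n = int(r)
    if (n < r) n++                # ceil for non-negative values
    if (n < 1) n = 1              # minReplicaCount: 1
    print n
  }'
}

desired_replicas 7.3 1 20   # prints 8
desired_replicas 45 1 20    # prints 20
```

Under these assumptions, a rate of 7.3 requests per second would ask for 8 replicas, and any rate above 20 would be capped at 20 replicas.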

Using OTEL’s metrics directly within KEDA provides a few notable advantages over the traditional Prometheus scaler:

- No Need for Prometheus Server: This approach skips the deployment of a Prometheus server, simplifying the setup and reducing resource usage.

- Faster Response Times: The push-based model allows metrics to reach KEDA almost instantaneously, avoiding delays associated with scrape intervals and enabling quicker scaling reactions.

- Efficient Scaling for Variable Loads: This setup is well-suited for environments with high variability or latency-sensitive workloads, where faster scaling can improve performance.

- Flexible Integration Options: The OTEL ecosystem supports multiple protocols and integration paths, making it easier to fit into a variety of observability setups without additional configuration overhead.

If you’re interested in more examples of this setup in action, feel free to explore: https://github.com/kedify/otel-add-on/tree/main/examples