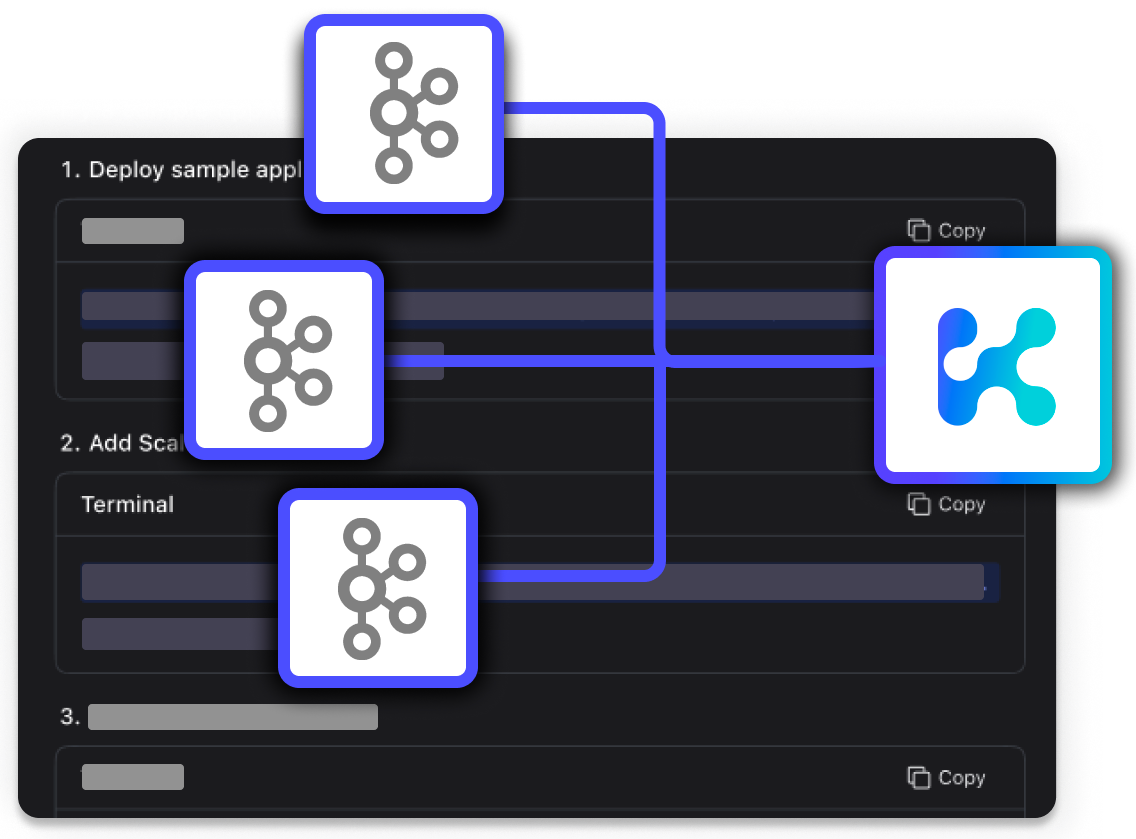

Use Apache Kafka metrics to trigger autoscaling with Kedify and KEDA

Apache Kafka is a distributed streaming platform that lets you publish, subscribe to, and process data streams in real time.

Designed for high throughput and scalability, it is well suited to handling high-velocity, high-volume data feeds in a fault-tolerant way across distributed systems and applications.

Featured Use Cases

Scenario:

Organizations must process logs to ensure compliance with data protection regulations. These logs require specific handling or transformation, such as anonymization or encryption, at regular intervals.

Apache Kafka Scaler Usage:

Kafka collects and stores logs from various systems, aggregating them for compliance checks and transformations.

KEDA Usage:

KEDA triggers batch jobs to process sensitive log data, using the Kafka consumer group's lag to decide when to scale up processing capacity. This helps ensure that logs are processed within legally required time frames.

Get Started

apiVersion: keda.sh/v1alpha1
kind: ScaledJob
metadata:
  name: compliance-log-processing-job
  namespace: default
spec:
  jobTargetRef:
    template:
      spec:
        containers:
          - name: log-compliance-processor
            image: compliance-processor:latest
        restartPolicy: Never
  pollingInterval: 600 # Every 10 minutes
  successfulJobsHistoryLimit: 2
  failedJobsHistoryLimit: 3
  maxReplicaCount: 5
  triggers:
    - type: kafka
      metadata:
        bootstrapServers: kafka.svc:9092
        consumerGroup: compliance-log-group
        topic: compliance-log-topic
        lagThreshold: '50'
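
To get a feel for how `lagThreshold: '50'` and `maxReplicaCount: 5` interact, the rough sketch below estimates how many jobs a single polling cycle might start for a given consumer lag. It assumes behavior along the lines of KEDA's default ScaledJob scaling strategy (one job per `lagThreshold` messages of lag, capped at `maxReplicaCount`, minus jobs already running). The function name `jobs_to_create` is hypothetical, and the sketch ignores details such as partition-count limits and activation thresholds, so treat it as an approximation rather than KEDA's actual implementation.

```python
import math


def jobs_to_create(total_lag: int, lag_threshold: int,
                   max_replica_count: int, running_jobs: int = 0) -> int:
    """Estimate how many new jobs one polling cycle would start.

    Simplified model (an assumption, not KEDA source code):
    desired = ceil(lag / lagThreshold), capped at maxReplicaCount,
    minus the jobs that are already running.
    """
    if lag_threshold <= 0:
        raise ValueError("lag_threshold must be positive")
    # One job per lag_threshold messages of lag, rounded up.
    desired = math.ceil(total_lag / lag_threshold)
    # The total number of jobs never exceeds maxReplicaCount.
    desired = min(desired, max_replica_count)
    # Jobs still running from earlier cycles count against the total.
    return max(desired - running_jobs, 0)


if __name__ == "__main__":
    # Values taken from the manifest: lagThreshold 50, maxReplicaCount 5.
    for lag in (0, 40, 120, 1000):
        print(lag, jobs_to_create(lag, lag_threshold=50, max_replica_count=5))
```

Under these assumptions, a lag of 120 messages would start 3 jobs, and any lag above 250 would be capped at 5 jobs. With `pollingInterval: 600`, this evaluation would happen once every 10 minutes.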