Envoy HTTP Scaler

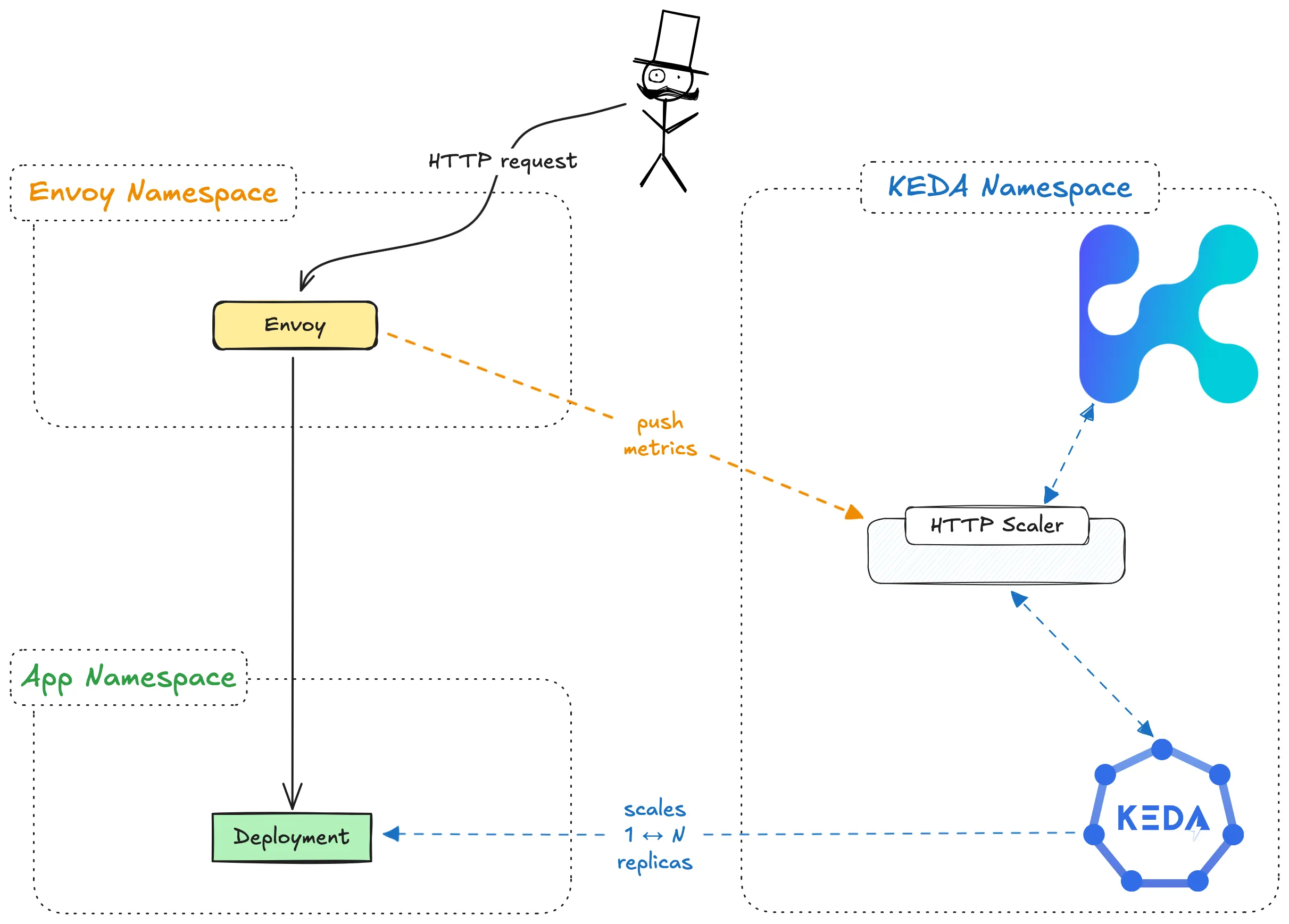

The Kedify Envoy HTTP Scaler scales your service based on incoming HTTP requests using a custom Envoy proxy. To use this scaler, you need an existing Envoy proxy in your environment, configured to send metrics to the Kedify Scaler.

The kedify-envoy-http scaler is designed specifically for ScaledObject resources to enable scaling based on incoming HTTP traffic using a custom Envoy proxy. Unlike kedify-http, it does not support scaling to zero, as Envoy cannot hold traffic during scale-to-zero scenarios. The scaler monitors traffic using the custom Envoy proxy and routes traffic accordingly.

With this scaler, users can define specific metrics, such as request rate or concurrency, to determine the scaling needs of the application, ensuring optimal performance and resource utilization.

This specification describes the kedify-envoy-http trigger, which scales workloads based on incoming HTTP traffic using a custom Envoy proxy.

Here is an example of trigger configuration using the Kedify Envoy HTTP scaler:

```yaml
triggers:
  - type: kedify-envoy-http
    metadata:
      scalingMetric: requestRate # or concurrency
      targetValue: '10'
      granularity: '1s'
      window: '1m0s'
      externalProxyMetricKey: 'my_app_com' # <-- this should match an envoy_cluster_name
```

- `scalingMetric`: Metric used for scaling, which can be either `requestRate` or `concurrency`.
- `targetValue`: Target value for the scaling metric. When incoming traffic meets or exceeds this value, KEDA will scale out the deployment. (Default: `100`)
- `granularity`: The granularity at which the request rate is measured. For example, "1s" means one second. (Only for `requestRate`, Default: `1s`)
- `window`: The window over which the request rate is averaged. For example, "1m0s" means one minute. (Only for `requestRate`, Default: `1m`)
- `externalProxyMetricKey`: Matching external metric name, used for aggregating metrics from the Envoy proxy (e.g., a specific `cluster_name` for Envoy).
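For comparison, a trigger that scales on concurrency rather than request rate might look like the sketch below. It assumes that `granularity` and `window` can simply be omitted, since the parameter descriptions mark them as applying only to `requestRate`. The `targetValue` of `'50'` is an illustrative number, not a recommendation.

```yaml
triggers:
  - type: kedify-envoy-http
    metadata:
      scalingMetric: concurrency
      targetValue: '50' # illustrative value, tune for your workload
      # granularity and window are left out on the assumption that
      # they only affect the requestRate metric
      externalProxyMetricKey: 'my_app_com' # must match the Envoy upstream cluster name
```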

Example ScaledObject with Kedify Envoy HTTP Trigger

Here is a full example of a ScaledObject definition using the Kedify Envoy HTTP trigger:

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: app-envoy
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: app-1
  cooldownPeriod: 5
  minReplicaCount: 1
  maxReplicaCount: 10
  triggers:
    - type: kedify-envoy-http
      metadata:
        scalingMetric: requestRate
        targetValue: '10'
        granularity: '1s'
        window: '1m0s'
        externalProxyMetricKey: 'my_app_com'
```

The Kedify Envoy HTTP Scaler uses Envoy to route traffic and collect metrics for applications to improve reliability and performance. This setup prevents situations where the interceptor may become a bottleneck. Standard reverse proxies, such as Envoy, nginx, or HAProxy, are better equipped to handle such conditions.

To route application traffic through a custom Envoy and enable it to flush metrics to KEDA for scaling, add the following snippet to the configuration of the Envoy proxies in your fleet. It makes Envoy push metrics to the interceptor every second, with all the labels and values needed for HTTP-based scaling.

```yaml
stats_flush_interval: 1s
stats_sinks:
  - name: kedify_metrics_sink
    typed_config:
      '@type': type.googleapis.com/envoy.config.metrics.v3.MetricsServiceConfig
      transport_api_version: V3
      report_counters_as_deltas: true
      emit_tags_as_labels: true
      grpc_service:
        envoy_grpc:
          cluster_name: kedify_metrics_service
          retry_policy:
            retry_back_off:
              base_interval: 0.5s
              max_interval: 5s
            num_retries: 100
            retry_on: connect-failure
```

Also, add the kedify_metrics_service cluster to your static_resources:

```yaml
static_resources:
  clusters:
    - name: kedify_metrics_service
      connect_timeout: 2s
      type: STRICT_DNS
      lb_policy: ROUND_ROBIN
      typed_extension_protocol_options:
        envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
          '@type': type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
          explicit_http_config:
            http2_protocol_options: {}
      load_assignment:
        cluster_name: kedify_metrics_service
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: keda-add-ons-http-interceptor-kedify-proxy-metric-sink.keda
                      port_value: 9901
```

This utilizes the stats_sink extension, implementing the V3 gRPC MetricsService. For each scaled application where these Envoy metrics should be aggregated with the internal interceptor metrics, you should configure externalProxyMetricKey in the trigger metadata:

```yaml
triggers:
  - type: kedify-envoy-http
    metadata:
      scalingMetric: requestRate
      targetValue: '10'
      granularity: '1s'
      window: '1m0s'
      externalProxyMetricKey: 'my_app_com'
```

Here, my_app_com refers to the upstream cluster_name from the Envoy configuration used to route the traffic to the desired application. Depending on your Envoy config, the section may look similar to this:

```yaml
static_resources:
  listeners:
    - filter_chains:
        - filters:
            - name: envoy.filters.network.http_connection_manager
              typed_config:
                '@type': type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                route_config:
                  virtual_hosts:
                    - domains: ['www.my-app.com']
                      routes:
                        - match:
                            prefix: '/'
                          route:
                            cluster: my_app_com # <-- cluster_name from virtual_host
                            auto_host_rewrite: false
  clusters:
    - name: my_app_com # <-- matching cluster_name upstream
```

The two Envoy metrics that are ingested and processed are:

- `cluster.upstream_rq_total` - for the request rate scaling metric
- `cluster.upstream_rq_active` - for the concurrency scaling metric
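To show how the Envoy fragments on this page fit together, the following is a minimal sketch of a single bootstrap file that combines the stats sink, the metrics cluster, and an application route. Several values are assumptions added only to make the sketch complete and do not come from Kedify: the listener name, its address and port, the `stat_prefix`, the virtual host name, the router filter entry, and the upstream address `app-1.default.svc.cluster.local:8080`. Adapt them to your own environment.

```yaml
# Stats sink: push metrics to the Kedify interceptor every second
stats_flush_interval: 1s
stats_sinks:
  - name: kedify_metrics_sink
    typed_config:
      '@type': type.googleapis.com/envoy.config.metrics.v3.MetricsServiceConfig
      transport_api_version: V3
      report_counters_as_deltas: true
      emit_tags_as_labels: true
      grpc_service:
        envoy_grpc:
          cluster_name: kedify_metrics_service

static_resources:
  listeners:
    - name: ingress # hypothetical listener name
      address:
        socket_address:
          address: 0.0.0.0 # hypothetical bind address
          port_value: 8080 # hypothetical port
      filter_chains:
        - filters:
            - name: envoy.filters.network.http_connection_manager
              typed_config:
                '@type': type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                stat_prefix: ingress_http # hypothetical prefix
                http_filters: # router filter added so the sketch is a complete HTTP chain
                  - name: envoy.filters.http.router
                    typed_config:
                      '@type': type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
                route_config:
                  virtual_hosts:
                    - name: my_app # hypothetical virtual host name
                      domains: ['www.my-app.com']
                      routes:
                        - match:
                            prefix: '/'
                          route:
                            cluster: my_app_com # same value as externalProxyMetricKey
                            auto_host_rewrite: false
  clusters:
    # Upstream for the scaled application; its name is the metric key
    - name: my_app_com
      connect_timeout: 2s
      type: STRICT_DNS
      load_assignment:
        cluster_name: my_app_com
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: app-1.default.svc.cluster.local # hypothetical Service DNS name
                      port_value: 8080 # hypothetical port
    # gRPC (HTTP/2) cluster that receives the flushed metrics
    - name: kedify_metrics_service
      connect_timeout: 2s
      type: STRICT_DNS
      lb_policy: ROUND_ROBIN
      typed_extension_protocol_options:
        envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
          '@type': type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
          explicit_http_config:
            http2_protocol_options: {}
      load_assignment:
        cluster_name: kedify_metrics_service
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: keda-add-ons-http-interceptor-kedify-proxy-metric-sink.keda
                      port_value: 9901
```

The retry policy from the stats sink snippet is left out here only to keep the sketch short. The point to check in your own configuration is that the cluster name used in the route, the name in the `clusters` list, and `externalProxyMetricKey` in the trigger all carry the same value.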