Autoscaling Boundaries: Optimize Shared Resources Utilization

by Jan Wozniak, Kedify

April 24, 2025

Introduction

In a cloud environment, it is crucial to manage resources efficiently and elastically as your demand changes. Luckily, there are tools available that can get you pretty good results with autoscaling already, for example, KEDA. However, in a scenario where you need to scale multiple applications in parallel but with strong shared boundaries, getting the best results out of your infrastructure can be challenging. That is why Kedify has introduced a new feature called ScalingGroups that helps you optimize resource utilization in non-trivial deployments. Whether you’re running a small cluster or large-scale operations, ScalingGroups can help you ensure that your resources are used effectively without overprovisioning.

In this blog post, we’ll explore what ScalingGroups are, how they work, and how you can use them to manage your restricted Kubernetes workloads more efficiently.

What Are ScalingGroups?

ScalingGroup is a brand-new CRD in Kedify that lets you set a shared capacity limit for a group of ScaledObjects. Typically, a ScaledObject defines how a particular application should scale based on certain metrics, and you can cap its replica count to prevent overprovisioning. But what if you have multiple applications that scale independently but use the same resource? You could give each ScaledObject the full limit, but then their combined replicas can exceed what the shared resource can handle. Alternatively, you could give each ScaledObject a fixed portion of the limit, but then the resource will often be underutilized. With ScalingGroups, you enforce a limit on the total number of replicas that a group of ScaledObjects can scale to and let Kedify work out the allocations dynamically, so that your cluster resources are used efficiently and stay within the global limits of your infrastructure.

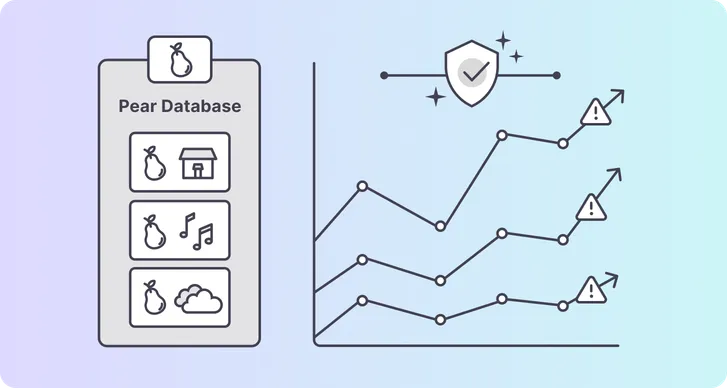

For example, imagine you run a business—let’s call it Pear Inc. There are three core services for your business:

- PearStore - where your customers download various apps

- PearTunes - where your customers can listen to great music

- PearCloud - where your customers may store their precious photos

All three services scale based on current usage and customer interactions, and they also all connect to the same instance of PearDB, where they store various data. This database allows at most 15 concurrent connections across all services. It doesn’t care whether it’s a replica of PearStore or PearCloud that is connecting; it simply can’t allow 16 concurrent connections.

Any resemblance to a certain fruit-themed tech giant is purely coincidental.

If not overloading PearDB is the most important thing, you may want to create three ScaledObjects, all with maxReplicaCount=5. But you will be missing out on available capacity during the launch of an exciting new game on PearStore. Everyone wants to play it, and no one is listening to music or taking any photos. But since you capped PearStore at 5 replicas, it won’t be able to scale past that and keep up with demand despite PearDB having available capacity.

The graph above visualizes this situation. While the load on PearCloud and PearTunes remains stable and low, the spike in traffic for PearStore results in a steep scale-out to 5, with a desire to scale even higher based on measured customer activity. This can result in frustrated customers as PearStore struggles to process requests for app downloads, and your cautious scaling limit becomes the bottleneck.
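The cautious configuration described above might look like this for PearStore. This is a minimal sketch that assumes the upstream KEDA ScaledObject schema; the Deployment name, the Prometheus address, the query, the threshold, and `minReplicaCount: 1` are hypothetical placeholders, not values from the scenario.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: pear-store
  namespace: default
spec:
  scaleTargetRef:
    name: pear-store            # hypothetical Deployment name
  minReplicaCount: 1            # assumption: keep at least one replica running
  maxReplicaCount: 5            # fixed one-third share of PearDB's 15 connections
  triggers:
    - type: prometheus          # assumption: any KEDA scaler could be used here
      metadata:
        serverAddress: http://prometheus.monitoring.svc:9090          # hypothetical address
        query: sum(rate(http_requests_total{app="pear-store"}[2m]))   # hypothetical query
        threshold: "100"        # hypothetical target value per replica
```

PearTunes and PearCloud would get equivalent objects with their own names and metrics. The fixed cap of 5 is what keeps PearStore from using connections the other two services leave idle.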

You could take a chance by configuring maxReplicaCount=15 on all ScaledObjects, but then you risk crashing PearDB when a Grammy Award-winning album hits PearTunes at the same moment that all of your PearCloud users witness a life event that must be recorded and shared with their entire families.

Scale-up enters dangerous territory when demand peaks for multiple services at the same time and the total scale goes above 15. In the best-case scenario, some instances of your backend services fail to connect to PearDB, so error rates rise moderately across all of them. A more serious situation arises when one of your services captures such a large portion of the PearDB connections that your other services become unavailable.

Adding ScalingGroups to your autoscaling workflow ensures that only as many connections as PearDB can handle are established, in the right distribution and quantity.

How Does It Work?

ScalingGroups work by grouping ScaledObjects together using a label selector. Each ScaledObject that matches the selector becomes part of the ScalingGroup. Kedify then monitors the desired replica count for each ScaledObject in the group and ensures that the total number of replicas across the group does not exceed the specified capacity.

```yaml
apiVersion: keda.kedify.io/v1alpha1
kind: ScalingGroup
metadata:
  name: pear-db
spec:
  capacity: 15
  selector:
    matchLabels:
      scaling-group: pear-db
```

If the total desired replicas exceed the group's capacity, Kedify will cap the metrics for the ScaledObjects in the group, preventing them from scaling beyond the limit. This ensures that your cluster resources are used efficiently and that no single application can monopolize the available resources and starve the others. Each ScaledObject is guaranteed its minimum quota, defined in minReplicaCount. You can set each maxReplicaCount to a desired quota (this could even be the global capacity of 15) and let Kedify dynamically figure out the right allocation for each application.
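To join the group, a ScaledObject needs labels that match the selector. The sketch below assumes the label goes in the ScaledObject's own `metadata.labels` and otherwise follows the upstream KEDA ScaledObject schema; the Deployment name, the trigger details, and `minReplicaCount: 1` are hypothetical placeholders.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: pear-store
  namespace: default
  labels:
    scaling-group: pear-db      # matches the ScalingGroup's matchLabels selector
spec:
  scaleTargetRef:
    name: pear-store            # hypothetical Deployment name
  minReplicaCount: 1            # assumption: the minimum quota for this member
  maxReplicaCount: 15           # may be as high as the group's capacity
  triggers:
    - type: prometheus          # assumption: any KEDA scaler could be used here
      metadata:
        serverAddress: http://prometheus.monitoring.svc:9090          # hypothetical address
        query: sum(rate(http_requests_total{app="pear-store"}[2m]))   # hypothetical query
        threshold: "100"        # hypothetical target value per replica
```

With the same label on the PearTunes and PearCloud ScaledObjects, all three would share the capacity of 15 instead of each holding a fixed slice of it.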

An example of a capped group, where all three services face scaling backpressure from PearDB, can look similar to this:

```yaml
apiVersion: keda.kedify.io/v1alpha1
kind: ScalingGroup
metadata:
  finalizers:
    - keda.kedify.io/scalinggroup
  generation: 2
  name: pear-db
  namespace: default
spec:
  capacity: 15
  selector:
    matchLabels:
      scaling-group: pear-db
status:
  memberCount: 3                # how many ScaledObjects belong to the group
  residualCapacity: 0           # capacity available to allocate for scale-out
  scaledObjects:                # scaling status for individual members
    pear-store:
      hpaDesiredReplicaCount: 7 # how many replicas KEDA allows HPA to scale to
      cappedStatus:
        replicaCountBasedOnMetrics: 14 # what would be the replica count purely based on the metric
        replicaCountCapped: 7          # capped replica count
    pear-tunes:
      hpaDesiredReplicaCount: 3
      cappedStatus:
        replicaCountBasedOnMetrics: 9
        replicaCountCapped: 3
    pear-cloud:
      hpaDesiredReplicaCount: 5
      cappedStatus:
        replicaCountBasedOnMetrics: 6
        replicaCountCapped: 5
```

Without ScalingGroup, the total number of pods trying to connect to PearDB would be 14 + 9 + 6 = 29.

Similarly, the capping decisions based on ScalingGroup membership can also be observed as events on each individual ScaledObject:

```
Events:
  Type    Reason         Age   From                  Message
  ----    ------         ----  ----                  -------
  Normal  MetricsCapped  14m   sg-metrics-processor  Metrics capped: [s0-workload-default(14 => 7)] due to ScalingGroup pear-db
```

Conclusion

Autoscaling independent but related applications is tricky, especially when shared resources play an important role. Kedify offers an easy-to-use solution that can address the shortcomings of upstream KEDA. You can explore this with Kedify KEDA v2.17. Installing Kedify takes no more than 5 minutes, and then you can try ScalingGroups through one of our GitHub examples.