Scaling HTTP-triggered Azure Functions on Kubernetes with KEDA

by Zbynek Roubalik, Kedify

February 03, 2025

Introduction

Azure Functions is a serverless compute solution that allows you to execute code in response to various triggers without having to manage the underlying infrastructure. This model boosts developer agility and scalability while reducing operational overhead.

Are you considering migrating your Azure Functions to Kubernetes?

Running your Azure Functions on Kubernetes offers a unified platform for all your workloads. This integration streamlines deployments and simplifies management by consolidating serverless functions with your microservices. It also leverages Kubernetes’ robust scaling and orchestration capabilities, while enhancing observability and resource efficiency across your entire ecosystem.

Azure Function on Kubernetes

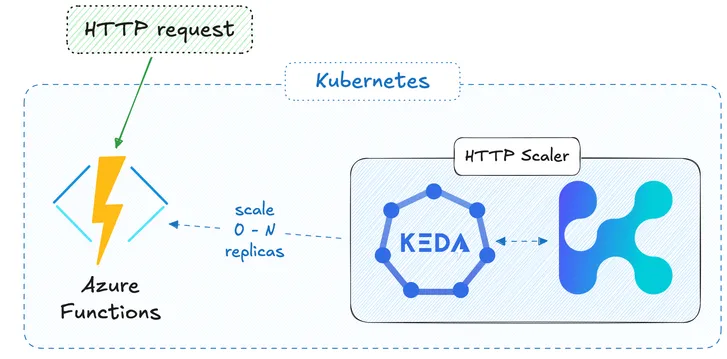

While Azure Functions triggered by RabbitMQ, Kafka, or various Azure services scale seamlessly on Kubernetes with KEDA, as noted in the Azure Functions documentation, HTTP-triggered autoscaling has often been trickier.

A Prometheus-based approach can work, but it typically lacks near real-time response and cannot scale to zero.

In this blog post, we’ll demonstrate how to run an HTTP-triggered Azure Function in Kubernetes and enable instant, event-driven scaling (including scale to zero) by using Kedify’s HTTP scaler for KEDA.

Azure Function with Kedify in Action

Running Azure Functions on Kubernetes and scaling them based on HTTP traffic with Kedify is very straightforward.

Below is a quick, end-to-end guide using a lightweight k3d cluster and Traefik Ingress. With minor adjustments, you can replicate this in any Kubernetes environment.

0. Prerequisites

Before you begin, ensure you have the following tools installed:

- k3d or any Kubernetes cluster of your choice

- Azure Functions Core Tools

- yq for in-place YAML editing

- curl and hey for testing
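A quick way to confirm the tools above are available is a small check like the one below. This is a minimal sketch rather than part of the original guide: `missing_tools` is a hypothetical helper name, `func` is the binary installed by Azure Functions Core Tools, and `docker` and `kubectl` are included on the assumption that they are needed for the build and deploy steps later on.

```shell
#!/bin/sh
# Print every tool from the argument list that is not found on PATH.
# (missing_tools is a hypothetical helper, not part of any CLI.)
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Assumed tool list for this guide; adjust it to your environment.
missing=$(missing_tools k3d func yq curl hey docker kubectl)
if [ -n "$missing" ]; then
  # $missing is left unquoted on purpose so the names print on one line.
  echo "Missing tools:" $missing
else
  echo "All prerequisites found."
fi
```

If anything is reported as missing, install it before continuing with the next step.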

1. Create a k3d Cluster & Install Kedify KEDA

- Let’s create a local Kubernetes cluster and expose HTTP/HTTPS ports via Traefik.

    k3d cluster create mycluster --port "9080:80@loadbalancer" --port "9443:443@loadbalancer"

- Install Kedify and KEDA. The easiest way is to use the Kedify Quickstart:

    # Get your installation command from the Quickstart above!
    kubectl apply -f 'https://api.kedify.io/onboarding/...'

2. Initialize an Azure Function and Build the Image

In this step, we will initialize a sample Python Azure Function using the HTTP trigger template. However, you can adjust this to fit any function you want to build. The function is designed to respond to incoming HTTP requests, making it ideal for real-time applications.

We’re initializing the function with the --docker parameter, which will generate a Dockerfile for us automatically.

This allows us to easily build a Docker image locally. After this, we import the image directly into our k3d Kubernetes cluster, skipping any external registry. This is perfect for a quick demo or local testing and keeps the process fully self-contained. In a production setup, you’d push the image to your registry of choice.

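    func init hello --python --docker
    cd hello
    func new --template "HTTP trigger" --name HelloFunction
    docker build -t hello-func .
    # k3d imports into the cluster named k3s-default unless told otherwise,
    # so we name the cluster created in step 1 explicitly.
    k3d image import hello-func -c mycluster

3. Generate Kubernetes Manifests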

In this step, we use the func kubernetes deploy command to generate the necessary Kubernetes resources for deploying our Azure Function. This includes:

- Deployment: Defines how the function’s container is deployed and managed.

- Service: Exposes the function inside the cluster so other services can communicate with it.

- Secrets & ConfigMaps: Store environment variables and sensitive information if needed.

By default, this command deploys the function to the Kubernetes cluster right away. However, since we are using an image that we imported locally, we first only generate the resources by using the --dry-run flag. This allows us to review and modify the resources in the next step, before we actually deploy the function.

    func kubernetes deploy --name hello --image-name hello-func --dry-run > hello-http.yaml

4. Adjust the Deployment

Since we imported the function’s container image directly into our k3d cluster, Kubernetes should not attempt to pull it from an external registry. However, because our image has no explicit tag (so it resolves to :latest), Kubernetes defaults its image pull policy to Always unless explicitly told otherwise.

To prevent Kubernetes from trying to fetch the image externally, we modify the generated hello-http.yaml file by setting the image pull policy to Never. This ensures that the function uses the locally imported image, avoiding unnecessary registry lookups.

    yq eval 'with(select(.kind == "Deployment" and .metadata.name == "hello-http") | .spec.template.spec.containers[] | select(.name == "hello-http"); .imagePullPolicy = "Never")' -i hello-http.yaml

5. Apply Resources

Let’s deploy the function into the cluster and verify that it is running correctly.

    kubectl apply -f hello-http.yaml
    watch kubectl get deployment hello-http

We should see similar output with 1 replica ready:

    Every 2,0s: kubectl get deployment hello-http

    NAME         READY   UP-TO-DATE   AVAILABLE   AGE
    hello-http   1/1     1            1           25s

6. Set Up an Ingress

Finally, we need to make sure the function is reachable at the host hello.keda by creating an Ingress resource pointing to the function’s Service hello-http.

    cat <<EOF | kubectl apply -f -
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-http-ingress
      annotations:
        traefik.ingress.kubernetes.io/router.entrypoints: web
    spec:
      rules:
      - host: hello.keda
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: hello-http
                port:
                  number: 80
    EOF

7. Test the Function

Let’s test the function by sending an HTTP request to the Ingress endpoint.

Because we are running in a local cluster, we will target localhost:9080 with the Host header set to hello.keda.

    curl -I -H "Host: hello.keda" http://localhost:9080

We used curl with the -I flag so the output only shows the response headers, including the 200 OK status code.

    HTTP/1.1 200 OK
    Content-Length: 149794
    Content-Type: text/html
    Date: Fri, 31 Jan 2025 13:09:14 GMT
    Server: Kestrel

If we open the URL in a browser (with the Host header set properly), we can see the Azure Function running on our cluster.

8. Enable Autoscaling with KEDA and Kedify Scaler

Now that we have our function running, we can enable autoscaling with KEDA and Kedify’s HTTP scaler by applying the following ScaledObject.

The function will scale between 0 and 10 replicas, based on the incoming HTTP request rate.

With the Kedify HTTP scaler’s targetValue set to 10, KEDA will try to scale out the workload so that each replica handles approximately 10 requests per second.

    cat <<EOF | kubectl apply -f -
    apiVersion: keda.sh/v1alpha1
    kind: ScaledObject
    metadata:
      name: hello-http-so
    spec:
      scaleTargetRef:
        name: hello-http
      initialCooldownPeriod: 10
      cooldownPeriod: 10
      minReplicaCount: 0
      maxReplicaCount: 10
      fallback:
        failureThreshold: 2
        replicas: 1
      advanced:
        restoreToOriginalReplicaCount: true
        horizontalPodAutoscalerConfig:
          behavior:
            scaleDown:
              stabilizationWindowSeconds: 5
      triggers:
      - type: kedify-http
        metadata:
          hosts: hello.keda
          service: hello-http
          port: "80"
          scalingMetric: requestRate
          targetValue: "10"
          granularity: 1s
          window: 10s
    EOF

Let’s check the deployment. Run the following command and keep it open for the next steps as well:

    watch kubectl get deployment hello-http

Because there is no load (no requests are targeting our function), we should see that after a few seconds the function is scaled to 0 replicas:

    Every 2,0s: kubectl get deployment hello-http

    NAME         READY   UP-TO-DATE   AVAILABLE   AGE
    hello-http   0/0     0            0           5m

9. Scale from 0 to 1

Let’s keep the deployment watch running and, in another terminal, send a request to the function:

    curl -I -H "Host: hello.keda" http://localhost:9080

After sending the request and after the initial cold start period, we can see the function scale up to 1 replica.

The length of the initial cold start period depends on the function’s runtime, image size and Kubernetes configuration, that is, on how quickly the cluster is able to start a new pod.
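Before generating more load, it can help to estimate how many replicas a given request rate should produce with the targetValue of 10 configured in step 8. The sketch below is a simplified model, assuming the replica count is roughly the request rate divided by the per-replica target, rounded up and clamped to the configured minimum and maximum. `estimate_replicas` is a hypothetical helper written for illustration; the real behavior also depends on the scaler's measurement window and the autoscaler's stabilization settings, which this arithmetic ignores.

```shell
#!/bin/sh
# Estimate replicas for a request rate: ceil(rate / target), clamped to [min, max].
# Arguments: rate (requests/s), target per replica, min replicas, max replicas.
# Simplified model for illustration only; not the autoscaler's actual algorithm.
estimate_replicas() {
  rate=$1
  target=$2
  min=$3
  max=$4
  # Integer ceiling division: add (target - 1) before dividing.
  desired=$(( (rate + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then
    desired=$min
  fi
  if [ "$desired" -gt "$max" ]; then
    desired=$max
  fi
  echo "$desired"
}

estimate_replicas 0 10 0 10     # no traffic: 0
estimate_replicas 35 10 0 10    # 35 req/s at 10 per replica: 4
estimate_replicas 250 10 0 10   # far above capacity, capped at maxReplicaCount: 10
```

Under this model, any sustained rate above roughly 90 requests per second would be expected to push the deployment to the maximum of 10 replicas.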

10. High Traffic Burst

To simulate a high traffic burst, we can use the hey tool to send a large number of requests to the function:

    hey -z 2m -c 50 -host "hello.keda" http://localhost:9080

Watch the function’s replicas increase up to the maximum (10 replicas) and then, after traffic decreases, drop back toward zero.

    Every 2,0s: kubectl get deployment hello-http

    NAME         READY   UP-TO-DATE   AVAILABLE   AGE
    hello-http   10/10   10           10          10m

    ...

    hello-http   0/0     0            0           15m

Conclusion

In this example, we demonstrated how to unify Azure Functions with your Kubernetes workloads, delivering a truly serverless experience with instant scale-out and scale-to-zero capabilities. Compared to Prometheus-based approaches, Kedify’s scaler reacts in near real-time without the delays of external metrics scraping, ensuring that your functions scale exactly when needed.

By combining KEDA and Kedify’s HTTP scaler, you can integrate Azure Functions with your Kubernetes infrastructure seamlessly—streamlining deployments, reducing complexity, and leveraging your existing monitoring and logging stacks for better visibility.

If you’re considering migrating from Azure Functions, AWS Lambda, Google Cloud Run, or any other managed offering, reach out to us to learn how we can help you transform your architecture.