Announcing Kedify's Public Beta Launch: Simplifying KEDA-Powered Autoscaling

by Rod Ebrahimi & Zbynek Roubalik

April 30, 2024

Update: Welcome, HN readers! Please take a moment to try our free beta. It takes only about 90 seconds, and we are eager to hear your feedback.

We’re pleased to announce the beta launch of Kedify’s SaaS-based Kubernetes event-driven autoscaling service. With this initial beta release, we build on KEDA’s open-source core and its recent CNCF recognition to offer a managed service that simplifies Kubernetes autoscaling for any type of workload.

Our mission with this and future releases is to deliver an autoscaling service that not only controls infrastructure costs but also lets your team focus on building applications instead of wrestling with custom workload autoscaling or cluster performance hacks. Kedify simplifies cloud-native autoscaling and does not depend on any specific cloud provider.

With this release, your team can:

— Install the latest version of KEDA using hardened images with no CVEs in seconds

— Manage KEDA installations across many clusters and cloud providers

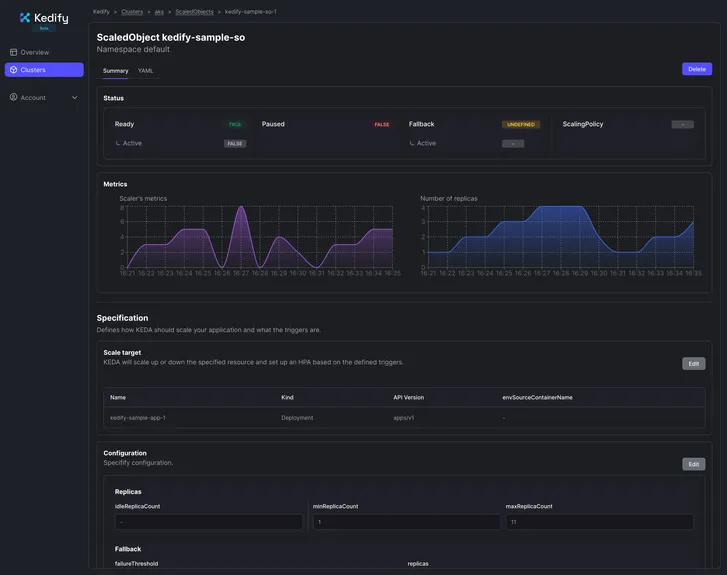

— Monitor autoscaling on any type of workload

— Specify role-based access controls for KEDA to help reduce the scope of privileged access in enterprise environments

— Get resource and configuration recommendations (available later this week)

— Use cron to define dynamic autoscaling policies (available later this week)

— Implement HTTP-based autoscaling (available soon)

— Try Kedify now for free
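Kedify's own cron policies were not yet available at the time of this announcement, but upstream KEDA already ships a `cron` scaler, and it shows what a time-based policy looks like. The minimal sketch below builds such a trigger in Python and wraps it in a KEDA `ScaledObject` manifest, printed as JSON (which `kubectl apply -f` accepts). It assumes standard 5-field cron expressions. The Deployment name `web`, the time zone, and the schedule are hypothetical placeholders, and the input checks are our own sanity guards, not KEDA's validation. This illustrates upstream KEDA's format, not Kedify's interface.

```python
import json


def cron_trigger(timezone: str, start: str, end: str, desired_replicas: int) -> dict:
    """Build a KEDA `cron` trigger that holds `desired_replicas` pods between `start` and `end`."""
    for expr in (start, end):
        # Assumed format: minute hour day-of-month month day-of-week.
        if len(expr.split()) != 5:
            raise ValueError(f"expected a 5-field cron expression, got {expr!r}")
    # Our own guard for this sketch, not a rule taken from KEDA.
    if desired_replicas < 1:
        raise ValueError("desired_replicas must be at least 1")
    return {
        "type": "cron",
        # KEDA trigger metadata values are strings.
        "metadata": {
            "timezone": timezone,  # IANA time zone name, e.g. "Europe/Prague"
            "start": start,
            "end": end,
            "desiredReplicas": str(desired_replicas),
        },
    }


def scaled_object(name: str, deployment: str, triggers: list, min_replicas: int = 0) -> dict:
    """Wrap triggers in a minimal KEDA ScaledObject manifest targeting a Deployment."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"name": deployment},
            "minReplicaCount": min_replicas,
            "triggers": triggers,
        },
    }


if __name__ == "__main__":
    # Hypothetical policy: 5 replicas on weekdays from 08:00 to 18:00 Prague time.
    business_hours = cron_trigger("Europe/Prague", "0 8 * * 1-5", "0 18 * * 1-5", 5)
    manifest = scaled_object("web-business-hours", "web", [business_hours])
    print(json.dumps(manifest, indent=2))
```

Outside the scheduled window, the workload should fall back to `minReplicaCount` unless another trigger on the same `ScaledObject` is active.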

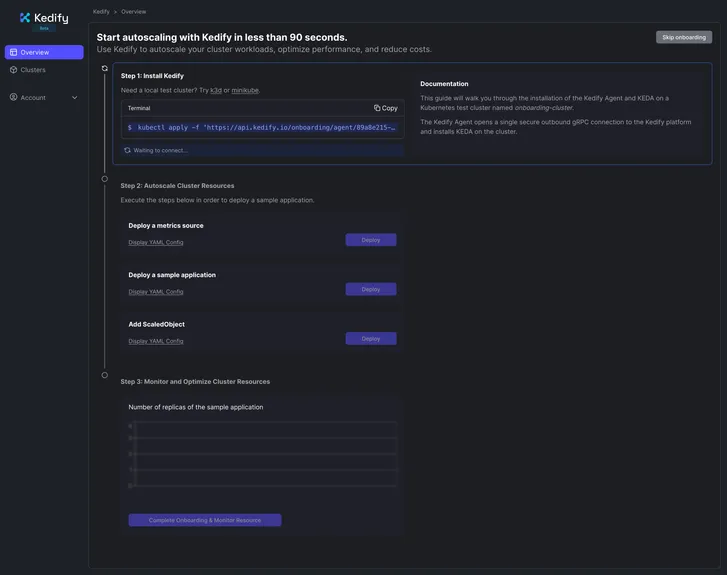

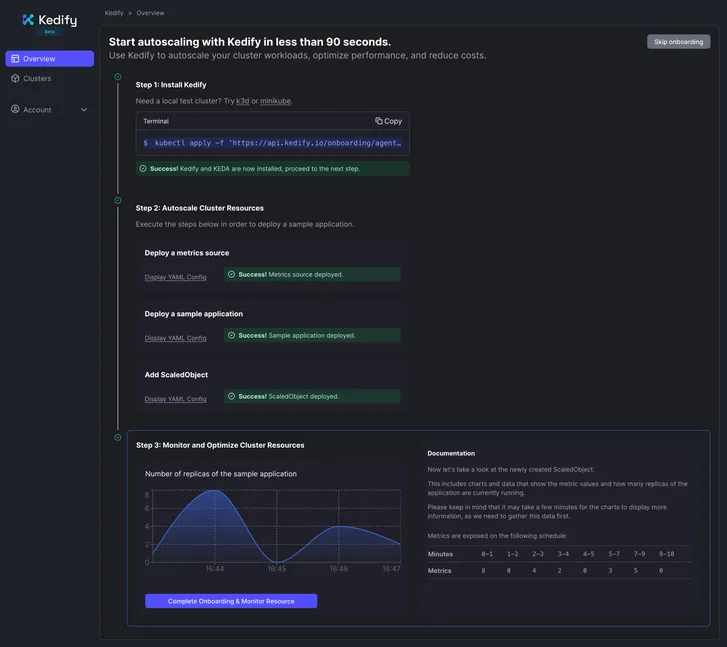

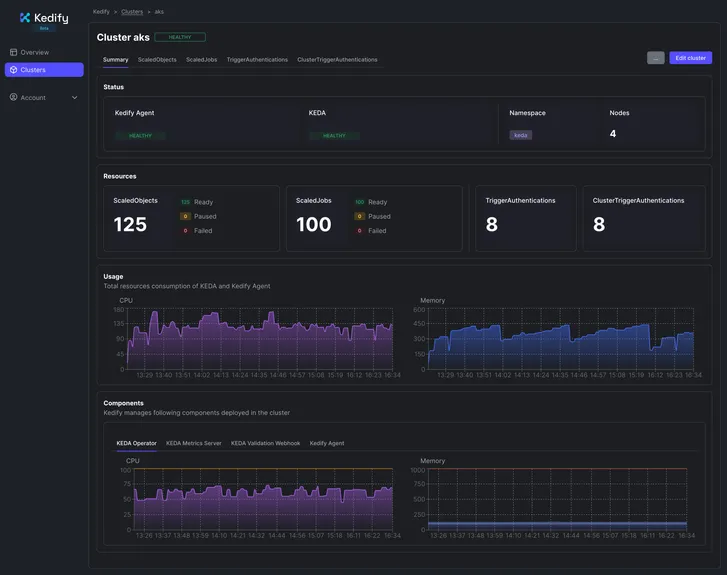

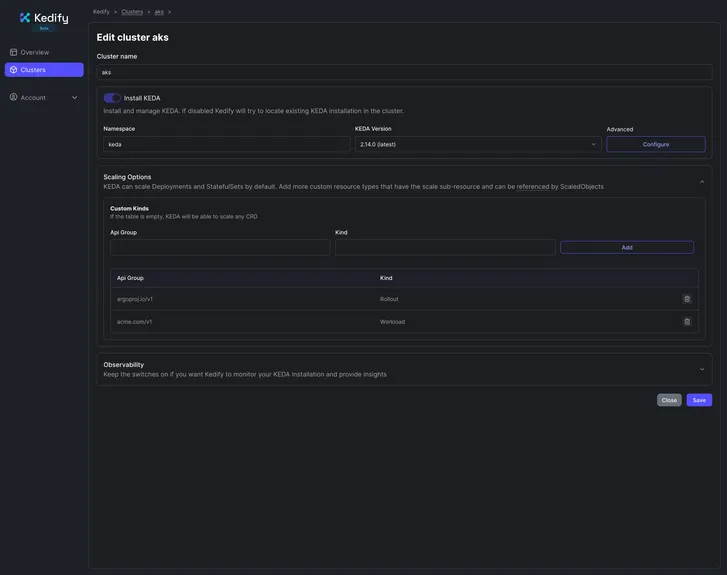

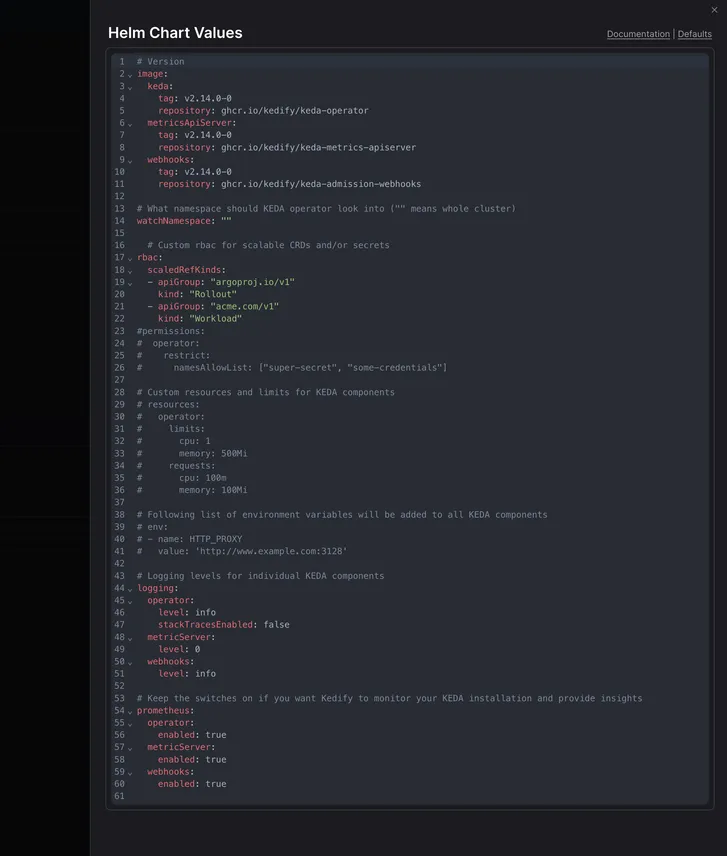

Streamlined KEDA Installations & Configuration: Get up and running quickly with simplified KEDA installations, and rely on the Kedify agent to maintain hardened KEDA deployments that can be configured to update automatically. We’ve invested significant effort in making the initial configuration and ongoing management of KEDA more straightforward through the new dashboard.

Explore our sample application during onboarding or add an existing cluster and install the latest KEDA in less than 90 seconds.

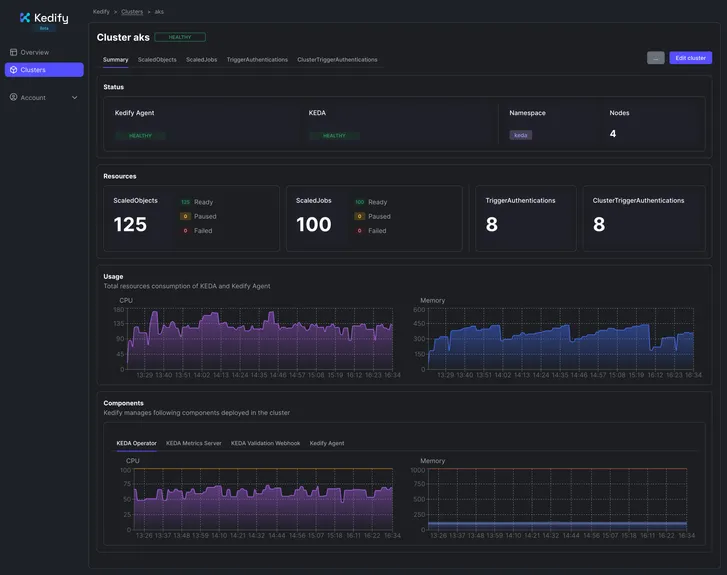

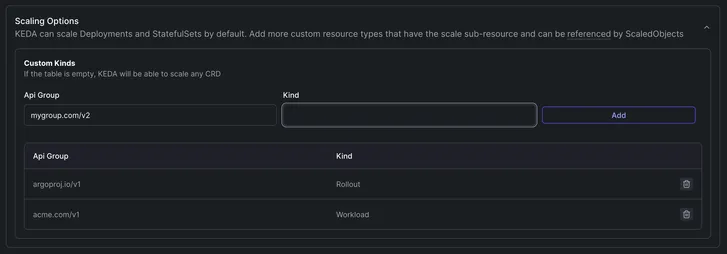

Built-in Multi-Cluster Support & Enhanced Resource Observability: Manage KEDA across clusters and cloud providers from a single place. The new dashboard also offers a comprehensive view of your application workloads, including cluster resource utilization. You can also configure any of KEDA’s 65+ open-source scalers, such as Apache Kafka, Prometheus, and Redis.

Precision Event-Driven Scaling Without Cloud Provider Constraints: Use Custom Resource Definitions (CRDs) to define precise autoscaling configurations tailored to your workload requirements and event sources, all through an intuitive web-based interface. Avoid the restrictions and costs of “black box” autoscaling offerings tied to a specific cloud provider.
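For readers new to KEDA, the main CRD behind these configurations is the `ScaledObject` (`apiVersion: keda.sh/v1alpha1`), which ties a workload to one or more event triggers. The minimal sketch below builds one in Python for KEDA's Apache Kafka scaler, scaling on consumer-group lag, and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The resource names, broker address, topic, consumer group, and lag threshold are hypothetical placeholders, and the sketch shows upstream KEDA's resource format, not how Kedify's interface generates it.

```python
import json


def kafka_scaled_object(
    name: str,
    deployment: str,
    bootstrap_servers: str,
    consumer_group: str,
    topic: str,
    lag_threshold: int,
    min_replicas: int = 0,
    max_replicas: int = 10,
) -> dict:
    """Build a KEDA ScaledObject that scales `deployment` on Kafka consumer-group lag."""
    # Sanity check added for this sketch.
    if not 0 <= min_replicas <= max_replicas:
        raise ValueError("expected 0 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": name},
        "spec": {
            # The workload KEDA scales; the target kind defaults to Deployment.
            "scaleTargetRef": {"name": deployment},
            "minReplicaCount": min_replicas,
            "maxReplicaCount": max_replicas,
            "triggers": [
                {
                    "type": "kafka",
                    # KEDA trigger metadata values are strings.
                    "metadata": {
                        "bootstrapServers": bootstrap_servers,
                        "consumerGroup": consumer_group,
                        "topic": topic,
                        "lagThreshold": str(lag_threshold),
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = kafka_scaled_object(
        name="order-processor-scaler",  # hypothetical names throughout
        deployment="order-processor",
        bootstrap_servers="kafka.default.svc:9092",
        consumer_group="order-workers",
        topic="orders",
        lag_threshold=50,
    )
    # Pipe this output into `kubectl apply -f -` to create the resource.
    print(json.dumps(manifest, indent=2))
```

Swapping the Kafka entry for another scaler (for example Prometheus or Redis) changes only the `type` and `metadata` of the trigger. The rest of the `ScaledObject` stays the same.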

Specify & Implement Role-based Access Controls for KEDA: Use Kedify to define RBAC rules that limit how KEDA accesses and uses cluster resources.

Transparent Pricing & Professional Enterprise-level Support: Benefit from our straightforward cluster-based pricing and expert support model, designed for businesses and teams of any size who want to take full advantage of what KEDA has to offer.

Collaborative Development: Your feedback is crucial during this beta phase. We are committed to rapid iteration and to addressing your team’s unique autoscaling needs. Please send us your feedback; we look forward to hearing from you!